Greetings everyone, and I wish you a meaningful Hùng Kings’ Commemoration Day (Giỗ Tổ Hùng Vương)! Here in Vietnam, this is a significant day on which we honor the legendary founding fathers of our nation. Reflecting on the legacy of the Hùng Kings reminds me of the incredible grit, passion, perseverance, and vision required to build something lasting – qualities of leadership that laid the very foundations of our country. It’s a profound inspiration, and I aspire to cultivate even a fraction of that dedication and foresight in my own projects and goals.

Fittingly, this important public holiday grants some welcome downtime. While my usual rhythm is about one blog post every week or two, I felt inspired by the spirit of the day – that sense of building and creation – to use this special occasion and the free time it provides to kick off a project I’m truly passionate about: documenting my own adventure in building a home data center from the ground up, as promised in the previous post.

Like many tech enthusiasts, I’ve been drawn to the idea for various reasons, but rising cloud costs have become a major catalyst for me recently. It really hit home when I realized that just three months of cloud service fees for a moderately powerful instance could easily match, or even exceed, the cost of buying a decent second-hand desktop or utilizing some of the perfectly capable hardware I already have lying around.
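
To make that comparison concrete, here is a minimal sketch of the break-even arithmetic. The function name and every figure in it are hypothetical placeholders rather than real prices, and it assumes the only running cost of self-hosting is electricity.

```python
import math


def breakeven_months(hardware_cost, cloud_monthly, power_monthly):
    """Return the number of months after which buying beats renting.

    All inputs use the same currency. Returns math.inf when self-hosting
    never pays off (monthly running cost >= monthly cloud fee).
    """
    monthly_saving = cloud_monthly - power_monthly
    if monthly_saving <= 0:
        return math.inf
    return hardware_cost / monthly_saving


# Hypothetical figures for illustration only; plug in your own numbers.
months = breakeven_months(hardware_cost=300.0, cloud_monthly=110.0, power_monthly=10.0)
print(f"Break-even after about {months:.1f} months")
```

Hardware you already own has an upfront cost of zero, so any positive monthly saving pays off from the first month.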

Add to that a stable internet connection here in Vietnam and the technical skills to manage my own systems (I generally know what I’m doing!). Beyond the potential cost savings and the chance to put existing hardware to use, this is an excellent opportunity to dive deeper and learn even more.

But what exactly is a ‘home data center’ in this context? For me, it doesn’t necessarily mean rows of humming servers in a dedicated, climate-controlled room (though maybe one day!). It can start much smaller, maybe with just a single machine, focusing on specific goals. This series, starting with today’s “Day -1” planning post, will chronicle that journey.

The Allure: Why Build a Home Data Center?

So, beyond my specific trigger of cloud costs and having some hardware on hand, what are the broader attractions of committing to building a home data center? Why deliberately introduce more complex systems, blinking lights, and the associated considerations like power and cooling into our homes? For me, and many technically-driven individuals across Vietnam and globally, the ‘why’ boils down to several key, compelling areas:

A Fertile Ground for Tech Skills & Agile Practices:

This is often a primary driver. It’s an unparalleled environment for getting truly hands-on with enterprise-level technologies like Kubernetes (k8s), configuring network storage solutions (perhaps using NFS…), mastering networking, exploring automation, and more. It’s also the perfect place to practice agile methodologies: build small, test, learn, iterate, and improve your setup piece by piece.

Enhanced Data Privacy and Control (A Key Factor for Many):

For many, a home data center offers significantly enhanced data privacy and control compared to relying solely on public clouds. Hosting critical information or services yourself means you define security policies, control access, and ensure data sovereignty, providing peace of mind hard to achieve otherwise.

Cost-Effective 24/7 Operation & Optimized Home Internet Use:

Once operational, the primary ongoing costs are electricity and your existing internet connection. Especially here in Vietnam, abundant residential bandwidth is common. This capacity is ideal for self-hosting. Furthermore, modern tools like Cloudflare Tunnel or similar proxy/tunneling services can optimize this connection, allowing secure external access to your services even without a static IP or opening risky inbound firewall ports. These tools effectively bypass common ISP limitations while often adding a layer of security (like DDoS protection) and potentially improving perceived performance for external users by leveraging their global network. Running your own systems uses power and bandwidth you likely already have, optimized with smart tools.

Customization and Efficient Scaling As You Need It:

Your own data center offers near-limitless flexibility, starting small (maybe two computers) and growing. The key advantage is inherent scalability, precisely when and how you need it. Incrementally add compute (like a Kubernetes cluster), storage (scaling an NFS server), or networking resources only as your projects demand. This ‘just-in-time’ scaling avoids waste and unnecessary cost, offering high efficiency. It’s also the ultimate safe sandbox for experimentation.

Granular Security Control and Implementation:

Building your own infrastructure grants complete control over your security posture, going far beyond basic ISP router settings. You can design and implement multi-layered defenses: configure powerful firewalls (pfSense/OPNsense) exactly as needed, enforce strict network segmentation (VLANs), manage granular access controls, and deploy specialized security monitoring tools. Technologies like the aforementioned Cloudflare Tunnel not only simplify secure connectivity but also act as a protective layer, obscuring your home IP address and shielding services from direct internet exposure. You determine your acceptable risk level and engineer the appropriate mitigations.

The Intrinsic Challenge and Satisfaction:

Finally, designing, building, and operating even a modest home data center – especially integrating tools like Kubernetes, NFS, and implementing robust, custom security measures – presents a deeply engaging intellectual challenge. Successfully managing your own sophisticated tech ecosystem brings profound satisfaction.

These motivating factors – hands-on learning, potential privacy gains, cost-effective operation leveraging home internet smartly, enhanced security control, and the ability to start small and scale efficiently only as needed – paint an exciting picture. However, this ambition must be balanced with a clear-eyed view of the complexities and potential hurdles involved… which brings us squarely to the reality check.

The Reality Check: Costs, Challenges, and Considerations

Alright, the allure is strong, the potential for learning and customization is vast, and the thought of running powerful services from home is exciting. But before we get carried away mentally racking servers, it’s absolutely essential to inject a significant dose of reality. Building and operating a home data center, even a small one, isn’t trivial. There are tangible costs, complexities, and practical hurdles that need careful consideration. Ignoring these can lead quickly to frustration, abandoned projects, unexpected bills, and maybe even some domestic friction. Let’s break down the major considerations – the potential “cons”:

The Financial Investment (Upfront and Ongoing):

Let’s be clear: while potentially cheaper than the cloud long-term for some uses, this isn’t necessarily a low-cost hobby, especially initially.

- Upfront Hardware Costs: Even starting small requires capital. You’ll need compute resources (servers, mini-PCs, capable older laptops, or SBCs), storage (HDDs/SSDs), networking gear (switches, cables, …), and power protection. My plan leverages laptop batteries and my apartment’s secondary backup power outlet to defer the immediate need for a separate UPS, though investment in the core hardware still applies.

- Ongoing Electricity Bills: This remains a key factor. Even energy-efficient hardware running 24/7 will consume power and contribute to the monthly electricity bill. It’s an operational expense (OpEx) that needs to be budgeted realistically. (Using low-power SBCs or laptops helps manage this, as noted below).
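
A rough way to budget the electricity line is to convert average power draw into kilowatt-hours per month. The sketch below assumes a flat price per kWh, which is a simplification since residential tariffs are often tiered. The wattages and the price are made-up example values.

```python
def monthly_energy_cost(avg_watts, price_per_kwh, hours_per_day=24.0, days=30):
    """Estimate the monthly electricity cost of a device.

    avg_watts:     average power draw in watts (measure it if you can)
    price_per_kwh: flat tariff in your currency (assumption: no tiers)
    """
    kwh = avg_watts / 1000.0 * hours_per_day * days
    return kwh * price_per_kwh


# Hypothetical devices and tariff, for illustration only.
devices = {"laptop-a": 25.0, "laptop-b": 25.0, "switch": 8.0}
total = sum(monthly_energy_cost(watts, price_per_kwh=0.10) for watts in devices.values())
print(f"Estimated monthly cost: {total:.2f}")
```

Measuring real draw with an inexpensive plug-in power meter gives much better inputs than the rating printed on a power adapter.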

Significant Time Commitment and Technical Complexity:

This is far from a “plug-and-play” setup. Be prepared to invest considerable time and effort in setup (OS, Kubernetes, NFS, networking, security) and continuous maintenance (patching, updates, backups, troubleshooting). This requires an ongoing, regular time commitment.

The Physical Realities: Noise, Heat, and Space:

Your digital infrastructure has a physical footprint with tangible side effects.

- Noise: Server fans can be loud. Using laptops or modern SBCs (like Orange Pi 5) can significantly mitigate this, as they are often silent or very quiet. Location planning remains important regardless.

- Heat: All electronics generate heat. Laptops and even powerful SBCs under load are no exception, though generally less than traditional servers. Adequate ventilation is crucial to ensure hardware longevity and stability.

- Space: You need a dedicated physical location with good airflow and access for maintenance, even if using relatively compact laptops or tiny SBCs.

Infrastructure Dependencies: Power Stability and Network Nuances:

- Stable Power Delivery: Having laptop batteries protects against brief dips/surges/switchover times, and the apartment’s backup power outlet offers resilience against longer outages. However, ensure the circuit’s capacity can handle the load.

- Networking Challenges: Home internet upload speed can be a bottleneck. Managing your internal network adds complexity. Tools like Cloudflare Tunnel help but require management.
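
To see why upload speed matters, it helps to estimate how long it takes to serve a file to someone outside your network. This is a back-of-the-envelope sketch: the `efficiency` factor that stands in for protocol overhead is an assumption, and the example speeds are hypothetical.

```python
def transfer_seconds(size_gb, upload_mbps, efficiency=0.8):
    """Estimate seconds needed to upload size_gb gigabytes.

    size_gb:     payload size in decimal gigabytes (1 GB = 10**9 bytes)
    upload_mbps: link upload speed in megabits per second
    efficiency:  assumed usable fraction of the link (0 < efficiency <= 1)
    """
    if upload_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("upload_mbps must be > 0 and efficiency in (0, 1]")
    bits = size_gb * 8 * 10**9
    usable_bits_per_second = upload_mbps * 10**6 * efficiency
    return bits / usable_bits_per_second


# Hypothetical example: a 5 GB backup archive over two different uplinks.
for mbps in (20, 200):
    minutes = transfer_seconds(5, mbps) / 60
    print(f"{mbps} Mbps uplink: about {minutes:.1f} minutes")
```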

The Security Burden Falls Entirely On You:

This cannot be overstated. You are solely responsible for securing everything – firewalls, patching, secure configurations, monitoring. Security is a continuous, active effort.

The Household Harmony Factor (WAF/PAF):

Finally, don’t underestimate the ‘Wife Acceptance Factor’ or ‘Partner / Family Acceptance Factor’. Even if you mitigate some technical challenges, the project still impacts your household. The persistent noise (even if minimized), the extra heat radiating from the equipment, the physical space consumed, the noticeable impact on the electricity bill, and the hours you might spend troubleshooting or tinkering instead of participating in other activities – these are all real considerations for the people you live with.

Let me share a personal cautionary tale to illustrate this vividly. In my initial burst of enthusiasm, thinking mainly of convenience, I decided a corner of our bedroom seemed like a perfectly logical spot to set up a small network switch and one or two of the first machines. This seemed fine during the day.

However, once nighttime arrived and the main lights went out, that corner transformed into an impromptu, unwanted light show. The rhythmic blinking of the network switch’s green LEDs, the steady glow of power lights on the laptops, the occasional frantic flicker of disk activity – it pierced the darkness relentlessly. My wife, after trying very patiently (for a while) to sleep despite what must have felt like a mini airport runway activating in the room, made her feelings extraordinarily clear (thankfully, no physical kicks were involved, but the message was just as impactful!). Sleep was impossible with that constant visual noise. The equipment was banished the very next morning.

The lesson was crystal clear and learned the hard way: compute gear, especially anything running 24/7 with indicator lights, needs its own dedicated, non-intrusive space, far away from shared relaxation or sleeping areas. Beyond just location, open communication about the project’s scope, potential impacts (like the power bill!), and time commitment is crucial before you start deploying gear. Setting expectations and finding compromises are absolutely vital for long-term project success and domestic peace!

Facing these realities, including leveraging mitigations like backup power, laptop batteries, and potentially energy-efficient SBCs, ensures you proceed with informed awareness. Understanding these challenges helps in planning effectively, which leads us to the how…

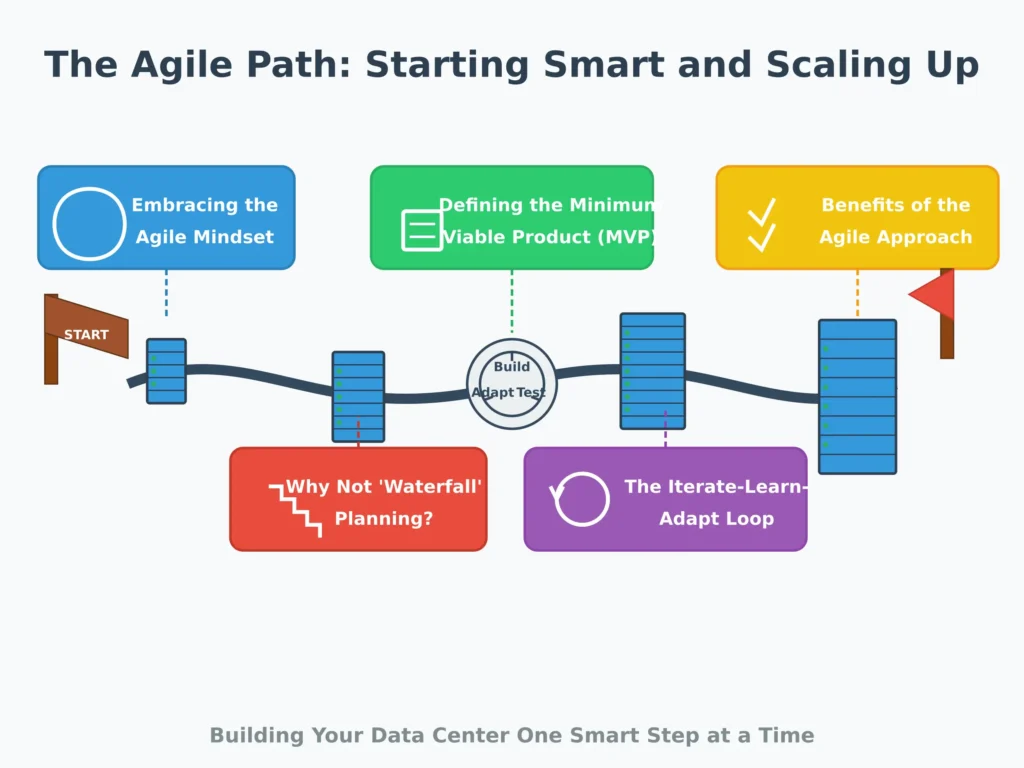

The Agile Path: Starting Smart and Scaling Up

Okay, we’ve explored the exciting potential (“The Allure”) and acknowledged the significant challenges (“The Reality Check”). So, how do we bridge the gap and actually embark on this home data center journey without getting completely overwhelmed or going broke? For me, the most sensible and effective strategy is to adopt an Agile mindset.

Embracing the Agile Mindset

Now, when I say Agile here, I’m not necessarily talking about imposing rigid Scrum frameworks or daily stand-up meetings on a personal project. I mean embracing the core philosophy: start small, build incrementally, learn constantly from feedback (both from the system and yourself), and adapt your plans based on real-world experience. It prioritizes progress and learning over achieving a perfect, predefined end-state from day one.

Why Not ‘Waterfall’ Planning?

Trying to map out every single component, configuration, and service of your “ultimate” home data center before you even begin (a traditional ‘waterfall’ approach) is often counterproductive for this kind of project. Technologies evolve rapidly (Kubernetes is a prime example!), your own interests might shift as you learn, unexpected hurdles (like discovering certain hardware is much louder than anticipated, or the infamous bedroom-light incident!) will inevitably arise, and personal budgets and time are finite. A detailed, rigid master plan created in isolation is brittle and likely to fail or cause unnecessary stress.

Defining the Minimum Viable Product (MVP)

Instead, the key is to define a Minimum Viable Product (MVP) for your very first iteration. Ask yourself honestly: “What is the absolute simplest thing I can build right now that delivers some specific, tangible value or achieves one core learning objective?” Forget all the cool ‘nice-to-have’ features for a moment. What is the essential building block, the kernel of your project, for Iteration Zero?

Perhaps your initial MVP isn’t deploying a complex application on a multi-node Kubernetes cluster. Maybe it’s simply:

- Setting up one laptop as a reliable NFS server and confirming another machine can successfully mount and use that storage.

- Or, installing a lightweight Kubernetes distribution (like K3s or MicroK8s) on a single laptop or SBC (like that Orange Pi) and getting the dashboard running.

- Or, even just deploying your first simple containerized application using Docker Compose on one machine.

The Iterate-Learn-Adapt Loop

Once you have that small, tightly-scoped MVP defined, you enter the iterative loop:

- Build It: Focus only on implementing that specific MVP. Resist the urge to add extra features (‘scope creep’) at this stage.

- Use / Test It: Get it running. Interact with it. Does it perform as expected? Is it stable?

- Learn From It: This is crucial. What challenges did you encounter during setup? What configuration choices caused problems? What performance bottlenecks did you notice? What did you learn about the specific technologies involved (e.g., intricacies of NFS permissions, Kubernetes networking concepts, container resource limits)?

- Adapt & Plan Next: Based directly on what you learned, decide on the next small, manageable increment. Perhaps it’s improving the stability or security of the current MVP. Maybe it’s deploying a second, slightly more complex application. Maybe it’s adding a second node to your K3s cluster. Or perhaps you learned your initial approach was flawed, and you need to adapt and try a different storage solution before proceeding.

Benefits of the Agile Approach

Adopting this Agile, iterative approach directly addresses many of the challenges outlined in the Reality Check:

- Manages Cost: You acquire hardware and software incrementally, spreading the cost over time and only buying what you need for the next confirmed step.

- Reduces Complexity: You tackle the project in smaller, more understandable chunks, avoiding the overwhelm of trying to configure everything at once.

- Accelerates Meaningful Learning: You get hands-on experience much faster. Mistakes are made on a smaller scale, making them less costly and easier to learn from. Theory meets practice quickly.

- Increases Motivation: Successfully completing small iterations provides tangible progress and a sense of accomplishment, keeping you engaged.

- Provides Flexibility: If your needs change, or you discover a better technology (e.g., switching from NFS to something else for Kubernetes storage later on), you can pivot far more easily than if you were locked into a massive upfront plan.

Thinking Agile transforms the potentially daunting task of “building a home data center” into an enjoyable, manageable series of learning adventures. It puts the focus on the journey and continuous improvement. But even before you build that very first MVP, there’s one final piece of essential preparation: Day -1 Planning. We will go over putting this Agile approach into practice and defining that initial MVP build in the ‘Day 0’ post of this series.

Day -1 Planning: Assessing Feasibility Before You Begin

We’ve explored the motivations (“The Allure”), faced the potential challenges (“The Reality Check”), and settled on an Agile approach to navigate the complexities (“The Agile Path”). Now, we arrive at perhaps the most critical step before you format that first SSD, plug in that network cable, or type that first apt install command: the Day -1 Planning phase. This is where we ground our enthusiasm and ideas in reality, translating ambition into a concrete, achievable starting point.

Skipping this ‘homework’ phase is incredibly tempting when you’re eager to start tinkering, but doing so is often the fastest route to wasted time, misspent money, and project abandonment. Thorough Day -1 planning helps ensure your initial actions align directly with your actual goals and constraints. It sets realistic expectations for yourself (and potentially others in your household) and critically informs the definition of that first Minimum Viable Product (MVP) required by our Agile approach. Think of it as drawing the map before starting the journey. Here’s what to consider:

Get Crystal Clear on Your Initial Goals (But Keep it Fun!):

What do you really want to achieve with your first iteration? Aim for goals that are specific, measurable, achievable, and relevant. But forget rigid deadlines – this isn’t a work project! Iteration takes time, troubleshooting takes unexpected detours, and learning happens at its own pace. The absolute priority is to keep it fun and engaging, just like the enjoyment I’ve found planning this out today! Don’t add unnecessary stress. Focus instead on clear, achievable technical objectives, tackled at a comfortable pace. Write them down! Examples: ‘Goal: Set up a single-node K3s cluster…’, ‘Goal: Configure Laptop A as an NFS server…’, ‘Goal: Install and configure Pi-hole…’. These specific technical goals dictate your immediate requirements.

Honestly Assess Your Resources (The “What”):

What do you realistically have available right now to achieve those initial goals?

- Budget: Define upfront spending tolerance and estimate ongoing electricity cost comfort level.

- Time: Be brutally honest – how many hours per week can you consistently dedicate without stress?

- Skills: Assess current knowledge vs. initial goal needs. Confirm willingness to learn patiently.

- Existing Gear: Catalog precisely what you have (laptops, SBCs, drives, etc.) and whether it’s suitable for the first iteration.

Evaluate Your Physical and Network Environment (The “Where”):

Where will this initial setup physically live, and what infrastructure supports it?

- Space: Confirm your chosen spot. Check ventilation, noise tolerance, and accessibility.

- Power: Double-check outlet availability (main and backup). Understand circuit limits. Consider backup outlet reliability.

- Networking: Plan connectivity (wired preferred). Check router proximity and internet upload speed.
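
For the power item, a quick sanity check is to compare the combined draw of your devices against what the circuit can safely deliver. The sketch below is only an illustration: the 220 V supply, the 10 A breaker, and the 80% safety factor are assumptions, so check your own breaker rating and local electrical guidance.

```python
def circuit_headroom_watts(device_watts, breaker_amps, volts=220.0, safety_factor=0.8):
    """Return remaining watts on a circuit after the listed devices.

    device_watts:  iterable of peak draws in watts
    breaker_amps:  rating of the breaker protecting the circuit
    volts:         nominal supply voltage (assumed value)
    safety_factor: fraction of the rating treated as usable (assumed rule of thumb)
    A negative result means the planned load exceeds the usable capacity.
    """
    usable_watts = breaker_amps * volts * safety_factor
    return usable_watts - sum(device_watts)


# Hypothetical load: two laptop chargers and a small switch on a 10 A circuit.
headroom = circuit_headroom_watts([65.0, 65.0, 15.0], breaker_amps=10.0)
print(f"Headroom: {headroom:.0f} W")
```

Remember that other appliances on the same circuit count toward the load as well.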

Define Your Starting Point (The First Realistic MVP):

Now, synthesize all the above. Based on your specific initial technical goals, constrained realistically by your available resources, and considering your physical and network environment, what is the most logical, achievable first step? Documenting this specific MVP definition becomes the primary objective leading into “Day 0”. For example: “My Day 0 MVP target is: Install Ubuntu Server 22.04 on Laptop A…, configure NFS…, ensure Laptop B… can mount it…, verify read/write.”
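
The final “verify read/write” step of an MVP like that can be scripted. The sketch below is one possible check, not a definitive test: it writes a small probe file into a directory and reads it back. The function name is hypothetical, and a successful read may be served from the client’s cache, so it confirms basic permissions rather than end-to-end durability.

```python
import os
import tempfile
import uuid
from pathlib import Path


def verify_read_write(mount_dir):
    """Return True if a probe file can be written to and read from mount_dir."""
    root = Path(mount_dir)
    if not root.is_dir():
        return False
    probe = root / f".rw-probe-{uuid.uuid4().hex}"
    payload = uuid.uuid4().hex
    try:
        probe.write_text(payload)
        return probe.read_text() == payload
    except OSError:
        # Covers permission errors, read-only mounts, stale handles, etc.
        return False
    finally:
        try:
            probe.unlink()  # Clean up; ignore the error if the file was never created.
        except OSError:
            pass


# Demo against a temporary directory so the sketch runs anywhere.
# On the client you would pass your own mount point instead.
with tempfile.TemporaryDirectory() as demo_dir:
    print("read/write OK:", verify_read_write(demo_dir))
```

Running it on the client machine against the mounted share, rather than on the server itself, is what actually exercises the NFS path.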

One Final, Crucial Preparation: Embrace the Possibility of Failure.

After considering all these practical points, there’s one crucial mental preparation essential for Day -1: be prepared for the possibility that things might not work out. Yes, despite meticulous planning, parts of this project – perhaps even the entire initial vision – might stumble, break, or simply prove too complex or costly. Hardware fails, configurations fight back, interests evolve.

But this is where I draw inspiration from entrepreneurs I admire, like Sir Richard Branson. A recurring theme often attributed to him suggests that even if you fail, even if you fall flat on your face, as long as you learn valuable lessons from the attempt and, importantly, can still laugh or find enjoyment in the process, then the effort itself was worthwhile. So, while we plan diligently, let’s also commit to embracing the journey itself – the inevitable challenges, the unexpected problems, and the invaluable learning that comes regardless of whether we achieve the original ‘end goal’. In a personal project like this, the process, the fun, and the learning can absolutely justify the entire endeavor, win or lose.

Completing this Day -1 assessment, including mentally preparing for bumps in the road, provides a solid foundation. It turns vague intentions into a concrete, realistic initial plan, significantly boosting your chances of making meaningful progress early on and avoiding common pitfalls and frustrations. With this crucial groundwork laid, we’ll be well-prepared to actually start building in Day 0.

Conclusion: Groundwork Laid, Ready for Day 0

And that brings us to the end of this inaugural “Day -1” post in the Chronicles of a Home Data Center series! It felt fitting to use the quiet reflection afforded by the Hùng Kings’ Commemoration Day here in Vietnam to map out these crucial first thoughts.

We’ve journeyed together today from the initial spark of enthusiasm – exploring the compelling reasons (“The Allure”) why building a home data center is so attractive – through the necessary and sobering dose of reality, acknowledging the costs, complexities, and potential pitfalls (“The Reality Check”). We then charted a course forward, embracing an “Agile Path” focused on starting small, iterating, and learning. Finally, we landed on the practical “Day -1 Planning” – the essential homework of defining goals, assessing resources, evaluating our environment, and crucially, adopting a mindset that values the learning journey, even embracing the possibility of failure.

If there’s one key takeaway from this “Day -1” deep dive, it’s the immense value of this preparation phase. Taking the time before diving into hardware and software to think critically about the why, the what, the where, and the how – and tempering ambition with realism – lays a much stronger foundation for success and, just as importantly, for enjoyment. It’s about starting smart.

With this groundwork conceptually laid out, I’m genuinely excited (and perhaps slightly apprehensive!) about the next stage. In the upcoming “Day 0” post of this series, I’ll translate this planning into action. I’ll share the specific Minimum Viable Product (MVP) I’ve defined for my initial build based on the Day -1 assessment, and we’ll take the first concrete steps together – likely involving setting up the operating system on the first piece of hardware and starting configuration.

What are your thoughts on this pre-planning phase? Are you embarking on a similar home data center or home lab journey? What are your main motivations or biggest concerns after reading this? Did I miss any critical Day -1 considerations? I’d love to hear your experiences, insights, and any questions you might have in the comments below. Let’s learn and build together!